October 7, 2009

Module : Console Development Part 2

Task

To write an individual project in assembly, C or C++, cross-compiled using Sony’s patched GNU toolchain (psp-gcc or the ProDG compiler, plus make) under the Cygwin environment, and running on the DTP-T1000A hardware tool (PSP devkit).

The project does not have to be a full working game (though it might optionally be a game), but a small tech demo that can be launched on a PSP devkit using the Windows debugger pspdbg. The demo design is almost completely up to me, but it must pack as many features as possible into the very tight technical constraints described below –

Technical Requirements

This assignment allows you to act freely within tight and well-defined constraints. You are encouraged to be as creative as possible with your demo, as long as it keeps within the following limits. The point is to use this creative invitation as a mission to squeeze as much as possible into this tiny space:

Technical essentials

- No more than 1,024 bytes of total static data in (pre-linkage) object files

- No more than 4,096 bytes of machine code in (pre-linkage) object files

- Frame rate stays at or above 30 FPS after the first 30 seconds of runtime

Design essentials

- When executed on PSP devkit, PRX produces on-screen animation

- Demo can be left unattended and will continue to animate (e.g. looping)

Platform essentials

- Demo code is written in MIPS asm / C / C++ (or some mixture of these)

- Demo compiles using either psp-gcc or ProDG’s PSP compiler

- Does NOT use ProDG VS2008 Integration for build (recommend make)

- Links with official PSP runtime libraries to produce executable PRX file

Optional features

- PRX built from multiple source files with clearly distinguished roles

- Demo includes user interaction elements, possibly even gameplay

- Build options to include on-screen debug info code (e.g. FPS counter)

- Code architecture uses clear techniques to minimize code size

- Memory usage optimized through choice of type, layout and allocations

- Audio libraries and software synthesis used to add an audio track

- Frame update calculations optimized using vector instructions in VFPU

- Inline MIPS assembly used to optimize critical sections of source code

Initial Ideas

L-System

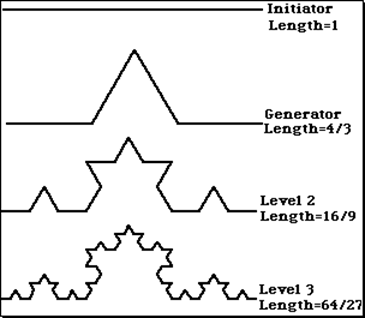

The Lindenmayer system (L-system) is a parallel rewriting system introduced by the Hungarian biologist Aristid Lindenmayer. L-systems can be used to generate fractals by repeatedly applying a fixed set of rewriting rules to a string of symbols. A procedural approach like this creates content “on the fly”, which suits my project because of the small data constraint. One system I have been looking at is the Koch snowflake –

Alphabet: F

Constants: +, –

Axiom: F++F++F

Rule: F → F–F++F–F

“F” means to draw forward

“+” means to turn right 60 degrees

“–” means to turn left 60 degrees

Starting with a line segment, each iteration applies the following steps to every line:

- Divide the line into 3 equal segments

- Draw an equilateral triangle that uses the middle segment as its base and points outward

- Remove the segment that was the base
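As a rough sketch of how the rewriting and drawing could work, the C++ below expands the Koch snowflake rules (axiom F++F++F, rule F → F–F++F–F) and then walks the resulting string with a simple turtle. It is a desktop sketch, not PSP code: `expandKoch`, `turtle` and `Point` are hypothetical names, the left turn is written as an ASCII '-', and a y-up coordinate system is assumed. Only the axiom and one rule need to be stored, which is the appeal for a 1,024-byte data budget.

```cpp
#include <cassert>
#include <cmath>
#include <string>
#include <utility>
#include <vector>

// Expand the Koch snowflake L-system: axiom "F++F++F", rule F -> F-F++F-F.
// '+' and '-' are constants and are copied through unchanged.
std::string expandKoch(int iterations) {
    assert(iterations >= 0);
    std::string current = "F++F++F";
    for (int i = 0; i < iterations; ++i) {
        std::string next;
        next.reserve(current.size() * 4);
        for (char c : current) {
            if (c == 'F') next += "F-F++F-F";
            else next += c;
        }
        current = std::move(next);
    }
    return current;
}

struct Point { float x, y; };

// Turtle interpretation: 'F' draws forward one step, '+' turns right 60 degrees,
// '-' turns left 60 degrees (y-up coordinates). Returns the polyline vertices.
std::vector<Point> turtle(const std::string& commands, float step) {
    const float kTurn = 3.14159265f / 3.0f;  // 60 degrees in radians
    float heading = 0.0f;
    Point p{0.0f, 0.0f};
    std::vector<Point> pts;
    pts.push_back(p);
    for (char c : commands) {
        if (c == 'F') {
            p.x += step * std::cos(heading);
            p.y += step * std::sin(heading);
            pts.push_back(p);
        } else if (c == '+') {
            heading -= kTurn;  // right turn = clockwise
        } else if (c == '-') {
            heading += kTurn;  // left turn = counter-clockwise
        }
    }
    return pts;
}
```

Each iteration multiplies the number of F segments by four (3 × 4^n after n iterations), so on the devkit it would probably make more sense to recurse and draw directly than to store the expanded string.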

Plasma Effects

The plasma effect has a history of use in the demo scene, and after looking at some examples online I found there are also a lot of optimization methods that can be applied. The main idea behind a plasma effect is to combine two functions of position and time into a single value that indexes a 256-color palette, and to redraw the canvas with it every frame. Because the value is recalculated from a time value at every step, the colors appear to flow, giving the “plasma” look.

Base function –

f(X, Y, T) = n

X and Y are the coordinates on the canvas, T is the time, and n is an integer from 0 to 255 that indexes the color palette.

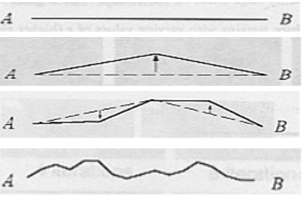
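A minimal sketch of the base function, assuming the two combined functions are a drifting diagonal sine wave and a set of moving rings (the frequencies are arbitrary), together with a precomputed 256-entry palette. `plasmaIndex`, `makePalette` and `Rgb` are hypothetical names; on the PSP the sine calls would likely be replaced by lookup tables or VFPU instructions.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

// f(x, y, t) -> palette index n in [0, 255], built from two combined functions.
int plasmaIndex(int x, int y, float t) {
    const float fx = static_cast<float>(x);
    const float fy = static_cast<float>(y);
    // Function 1: a diagonal sine wave that drifts with time.
    const float a = std::sin((fx + fy) * 0.05f + t);
    // Function 2: concentric rings around the origin that move over time.
    const float b = std::sin(std::sqrt(fx * fx + fy * fy) * 0.08f - t);
    // a + b + 2 lies in [0, 4], so scaling by 63.75 gives [0, 255].
    const int n = static_cast<int>((a + b + 2.0f) * 63.75f);
    assert(n >= 0 && n <= 255);
    return n;
}

struct Rgb { std::uint8_t r, g, b; };

// A smooth 256-entry palette built from three phase-shifted sine waves.
std::array<Rgb, 256> makePalette() {
    std::array<Rgb, 256> pal{};
    const float twoPi = 6.2831853f;
    for (int i = 0; i < 256; ++i) {
        const float p = twoPi * static_cast<float>(i) / 256.0f;
        pal[i] = {static_cast<std::uint8_t>(128.0f + 127.0f * std::sin(p)),
                  static_cast<std::uint8_t>(128.0f + 127.0f * std::sin(p + twoPi / 3.0f)),
                  static_cast<std::uint8_t>(128.0f + 127.0f * std::sin(p + 2.0f * twoPi / 3.0f))};
    }
    return pal;
}
```

Advancing `t` each frame makes the pattern move; adding a frame counter to the index (modulo 256) instead cycles the palette, which is a common cheaper alternative.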

Midpoint Displacement

I have been looking at this in another module and have successfully implemented it using Microsoft’s DirectX platform. There it is done with a “plasma fractal”, or “diamond-square” algorithm, which applies the idea below to a 2D grid to create mountain-like terrain. The basic idea is to randomly displace the midpoint of a line:

- Get the midpoint of the line and displace it by a random height value in the range [-h, h].

- Repeat on the new line segments.

- Multiply the height range by 2^(-roughness).

- Repeat until the desired level of detail is achieved.
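The steps above, in one dimension, can be sketched in C++ as follows. This is an illustration rather than the DirectX implementation mentioned earlier: `midpointDisplace` is a hypothetical function, the endpoints are assumed to start at height 0, and the random range shrinks by 2^(-roughness) after each level.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// 1D midpoint displacement. Returns 2^levels + 1 heights with fixed endpoints at 0.
std::vector<float> midpointDisplace(int levels, float h, float roughness, unsigned seed) {
    assert(levels >= 0 && levels < 24 && h >= 0.0f);
    const std::size_t n = (std::size_t{1} << levels) + 1;
    std::vector<float> height(n, 0.0f);
    std::mt19937 rng(seed);
    const float shrink = std::pow(2.0f, -roughness);
    // step is the length of the segments being split at this level.
    for (std::size_t step = n - 1; step > 1; step /= 2) {
        std::uniform_real_distribution<float> offset(-h, h);
        for (std::size_t i = step / 2; i < n; i += step) {
            // Midpoint of the segment's two endpoints, displaced by a value in [-h, h).
            const float mid = 0.5f * (height[i - step / 2] + height[i + step / 2]);
            height[i] = mid + offset(rng);
        }
        h *= shrink;  // reduce the range before the next, finer level
    }
    return height;
}
```

A roughness of 1 halves the range at every level, giving fairly smooth ridges; values closer to 0 keep more of the range at fine levels and produce more jagged terrain.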

PSP Demo Scene – Assembly, C++ and Sony’s SDK

For my “Console Development” module I was given the task of writing a small ‘demo scene’ style project in assembly, C or C++, cross-compiled using Sony’s patched GNU toolchain (psp-gcc or the ProDG compiler, plus make) under the Cygwin environment, and then running it on the DTP-T1000A hardware tool (PSP devkit).

The project did not have to be a full working game (though that was an option), but instead some sort of small tech demo that could be launched on a PSP devkit using the Windows debugger pspdbg. The demo design was almost completely up to me, but it had to pack as many features as possible into very tight technical constraints.

This assignment allowed me to act freely within tight, well-defined constraints. I was encouraged to be as creative as possible with my demo, as long as it stayed within the specified limits. The point was to treat this creative invitation as a mission to squeeze as much as possible into a tiny amount of space.

My Demo

Please check out my development report to see what I came up with! Videos and pictures will be added shortly.

October 6, 2009

Module : Introduction to 3D Graphics – Part 3

Post Christmas

The first thing I decided to do was to rename the project and refactor the code into a more logical order. Cleaning up the code over Christmas was a good place to restart, as I started to notice small bugs and inconsistencies that had led to big problems the first time around. The biggest problem I had originally was with my Matrix class, and it was down to human error: I learned the hard way that copying and pasting code can be a very dangerous technique.

The fact that my original hand-in wasn’t much of a demo was also a concern, so I decided I wanted my renderer to demonstrate what I had done and the changes made through the rendering pipeline. I also wanted to include a frames-per-second counter to test the efficiency of my code and spot any bottlenecks I could optimise.

For the time being, though, I decided that tidying up the code and adopting naming conventions, such as member variables starting with an underscore (_), would help keep the code tidy. I renamed the namespaces and started planning what else I could improve in the time I had left.

The main things I wanted in my demo were ambient and directional lighting, an improved working camera, properly working back-face culling, and turning my code into a working demo.

From Christmas onwards

Because I needed to spend time on other modules, I couldn’t spend all my time on my renderer and implement every single feature. Below is a list of the changes I made and how long they took –

- Tidy code – 1 hour

- Change Matrix class – 2 hours (around 12 hours of debugging before the initial hand-in)

- Change Camera class – 2 hours

- Fix back-face culling – 4 hours

- Add a working polygon sort method – 3 hours

- Improve pipeline – 3 hours

- Implement ambient lighting – 6 hours

- Implement directional lighting – 3 hours (longer when including the fixes to the Polygon class and back-face culling)

- Set up an FPS counter by following a tutorial in the module PDF – 1 hour

- Display text and numbers on screen using an MSDN example – 2 hours

- Implement case-based drawing driven by elapsed time – 2 hours

Notable Code Changes / Additions

In the camera class I added a GetCameraPos() method that returns a Vector. This is used in the MyApp render call when calculating back faces: the object pointer calls the back-face method and passes the camera position in. This was an important addition because my renderer creates two separate cameras and rotates them around the models. Originally the back faces were being calculated incorrectly and only half of each model was drawn, and it took me some time to understand why the back-face culling wasn’t working.

I added a std::sort call to my Object sort() method after working through the online MSDN tutorial recommended in the module PDF. Just above that method in Object.cpp I added a bool UDGreater function that checks whether one Polygon’s _zIndex is greater than the other’s, which std::sort uses to order them. I used _numPolys – 1 intending to point at the last element; however, std::sort expects its end argument to point one past the last element (_polys + _numPolys), so if _numPolys – 1 is used to form the end of the range, the final polygon is left out of the sort. This change also required an overloaded operator in the Polygon class, and I had to qualify Polygon with my namespace in the call, because the name clashes with the Win32 GDI Polygon() function declared in the Windows headers. I also added a Vector to this class to store each polygon’s normal for the lighting calculations.
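A minimal sketch of the depth sort described above, assuming a stripped-down, hypothetical `Polygon` struct with a `zIndex` member and a view space in which larger z means farther from the camera, so farther polygons are drawn first (painter’s algorithm). Wrapping the struct in a namespace (`demo` here) sidesteps the clash with the Win32 `Polygon()` function when windows.h is included.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

namespace demo {

// Hypothetical, stripped-down polygon: three vertex indices and a depth value.
struct Polygon {
    int indices[3];
    float zIndex;
};

// Comparator in the spirit of UDGreater: the farther polygon comes first.
bool UDGreater(const Polygon& a, const Polygon& b) { return a.zIndex > b.zIndex; }

// Back-to-front sort. std::sort works on the half-open range [first, last).
void sortPolys(Polygon* polys, int numPolys) {
    assert(numPolys >= 0 && (polys != nullptr || numPolys == 0));
    std::sort(polys, polys + numPolys, UDGreater);
}

}  // namespace demo
```

The end pointer is `polys + numPolys`: because the range is half-open, std::sort already stops before that address, so no “– 1” is needed.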

One change that made a huge difference was in my back-face calculation. When working out the _polys[i].normal vector, by taking the cross product of two edge vectors created by subtracting vertices indexed from the model data, I realised I was using the original vertices before they had been transformed (which later also gave me strange lighting effects). This was just a small change, but it contributed hugely because it gave me correct back-face culling.
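The fix can be sketched as follows, assuming counter-clockwise winding marks a front face and that `v0`, `v1`, `v2` have already been transformed into the same space as the camera position. The `Vec3` helpers and `isBackFace` are hypothetical stand-ins for the renderer’s own Vector class and back-face method.

```cpp
#include <cassert>

struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Inputs must be the transformed vertices, not the raw model data.
bool isBackFace(Vec3 v0, Vec3 v1, Vec3 v2, Vec3 cameraPos) {
    // Face normal from two edge vectors (counter-clockwise = front, by assumption).
    const Vec3 normal = cross(sub(v1, v0), sub(v2, v0));
    assert(dot(normal, normal) > 0.0f && "degenerate triangle");
    // The face points away from the camera if the normal and the
    // vector towards the camera are at 90 degrees or more.
    const Vec3 toCamera = sub(cameraPos, v0);
    return dot(normal, toCamera) <= 0.0f;
}
```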

The biggest challenge I faced next was getting lighting into my application. Although the tutorials we were given now seem relatively clear, at the time I didn’t understand how to read and manipulate the Gdiplus Color fields. Even though I thought the maths behind lighting was relatively straightforward, it took me a long time to work out how to implement it in code. Once I understood how to use the colour fields, I managed to implement ambient lighting (after remembering to store the colour back in the polygon). Directional lighting took a little longer, because the back-face calculation problem described above was giving me some strange lighting results. The only difference in the directional lighting calculation was the dot product between each polygon’s normal and the vector representing the light’s direction. I also added a TurnOffLighting() method that simply loops through each polygon in an object and sets its colour to black.
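A sketch of both lighting terms for one flat-shaded polygon, with a single shared clamp. The names, the 0 to 255 integer colour channels and the convention that `lightDir` points from the surface toward the light are all assumptions; GDI+ colour handling is left out so the example stays self-contained.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct Rgb { int r, g, b; };  // 0..255 per channel

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(Vec3 v) {
    const float len = std::sqrt(dot(v, v));
    assert(len > 0.0f && "cannot normalize a zero vector");
    return {v.x / len, v.y / len, v.z / len};
}

// One shared clamp instead of repeating it in every lighting method.
int clampChannel(float value) {
    return static_cast<int>(std::min(std::max(value, 0.0f), 255.0f));
}

// Ambient term plus a Lambert (N.L) directional term for one polygon.
Rgb shade(Rgb base, float ambient, float diffuse, Vec3 normal, Vec3 lightDir) {
    // Surfaces facing away from the light receive no directional light.
    const float nDotL = std::max(0.0f, dot(normalize(normal), normalize(lightDir)));
    const float k = ambient + diffuse * nDotL;
    return {clampChannel(base.r * k), clampChannel(base.g * k), clampChannel(base.b * k)};
}
```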

I added an FPS counter and was pleasantly surprised by the solid frame rate my renderer produced. The only bottleneck seemed to be calling drawWireFrame() in rasterizer.cpp: it costs far more frame rate than any other calculation, including lighting. I also added a clamping method for my ARGB lighting values, which I can call instead of repeating the clamping code in each lighting method.
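A frame counter along these lines can be sketched in plain C++. It assumes the caller passes the current time in seconds from a monotonic clock (for example std::chrono::steady_clock); `FpsCounter` is a hypothetical class, not the one from the tutorial.

```cpp
#include <cassert>

// Counts frames and updates the FPS figure once at least a second has passed.
class FpsCounter {
public:
    explicit FpsCounter(double startSeconds) : windowStart_(startSeconds), last_(startSeconds) {}

    // Call once per rendered frame with the current time in seconds.
    void frame(double nowSeconds) {
        assert(nowSeconds >= last_ && "time must not go backwards");
        last_ = nowSeconds;
        ++frames_;
        const double elapsed = nowSeconds - windowStart_;
        if (elapsed >= 1.0) {
            fps_ = frames_ / elapsed;  // frames counted in this window
            frames_ = 0;
            windowStart_ = nowSeconds;
        }
    }

    double fps() const { return fps_; }

private:
    double windowStart_;
    double last_;
    int frames_ = 0;
    double fps_ = 0.0;
};
```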

One of the last things I did was to add a series of “if” statements to the render method that switch each feature on and off in turn, based on elapsed time, giving my demo a more “demo”-like feel. The final “if – else” branch simply paints the screen black to give the impression that the rendering has finished.
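The time-driven chain of “if” statements might look something like this. The stage names follow the features listed for this renderer, but the ten-second duration of each stage is invented for illustration.

```cpp
#include <cassert>

enum class Stage { Wireframe, Solid, Ambient, Directional, Finished };

// Choose which feature to show from the elapsed demo time (hypothetical timings).
Stage stageFor(double elapsedSeconds) {
    assert(elapsedSeconds >= 0.0);
    if (elapsedSeconds < 10.0) return Stage::Wireframe;
    else if (elapsedSeconds < 20.0) return Stage::Solid;
    else if (elapsedSeconds < 30.0) return Stage::Ambient;
    else if (elapsedSeconds < 40.0) return Stage::Directional;
    else return Stage::Finished;  // final else: paint the screen black
}
```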

I am pleased that my renderer now resembles a demo, and I would like to keep improving it. I also attempted to add my own rasterizer and implement Gouraud shading; I had tried both of these before, but they will need more time before they work effectively.

C++ Software Renderer

This is the graphics renderer I wrote in C++ as part of my “Introduction to 3D Graphics” module. Using the Gdiplus library and a custom .md2 model loader, it draws two models, each spinning about its own independent axis, and lights them using a range of mathematical calculations and techniques. The core focus of this module was learning the theory behind graphics programming and then putting it into practice. It was particularly maths-heavy and served as a full introduction to the C++ language and, in particular, object orientation.

Features of my demo

- Fully implemented Vector class including specific functionality for mathematical calculations

- Fully implemented Matrix class offering the same functionality as the Vector class

- Custom Camera class used for representing transforms and displaying each model

- .md2 model loader, which is responsible for loading the vertex data for my models

- A fully working rasterizer, which is used to draw wireframe and solid brush versions of my models

- Ambient light calculations, which are shown on both models independently

- Directional lighting, which is shown on both models

- Back-face culling

- Depth sorting

- Rotation and scaling functionality

For a detailed report of how I implemented these features please head over to my development report where I discuss my programming techniques in more detail.

I am still working on this project, with the hope of adding specular lighting, Gouraud shading, texturing and my own custom rasterizer.

June 22, 2009

Module : Interactive 3D Graphics Part 1

The follow-on module to Introduction to 3D Graphics, Interactive 3D Graphics took things to the next level. It built on what we had learned in the introductory module, both theoretically and practically. Instead of writing our own Matrix and Vector classes, we now had the chance to use built-in ones, and using Microsoft’s DirectX we looked at implementing more complex, dynamic scenery. As well as focusing on the pipeline and the way graphical data is processed, I was also required to look at more efficient techniques for storing and loading resources.

The Project

By far the most ambitious and technically the most difficult project, this required me to make a simplified version of the popular Nintendo Wii game de Blob. I had to implement a simple menu system, music and the game mechanics used in the full version of the game, along with collision, a dynamic camera and a fully rendered environment. Additional features included bounding volumes, a state system, UI components and shader effects.

Module : Mobile Devices Part 1

Introduced in the second semester of my computer games programming course at the University of Derby, Mobile Devices focused on writing applications and games for platforms such as mobile phones and PDAs. For this module we used J2ME and the NetBeans development environment to make our applications, so we wrote our code in Java. This was the first time I had ever used Java, but I do have experience with C#, which is syntactically very similar. There are some slight differences between Java and C#, but nothing too difficult.

The Projects

For this module there were two pieces of work to submit as our final hand-in, which together made up my final grade. The first assignment required me to write a paper comparing and contrasting the Java ME NetBeans platform with another platform of my choice. The paper had to specify appropriate strengths and weaknesses of both systems (this could include user interface issues, but should not focus intently on them), and propose a choice of platform based on some specific intrinsic quality of one system (not a user interface feature, but some internal functionality that one system has over the other).

The other project required me to write a game or application suitable for a mobile device that supports J2ME. This required a full written proposal and a development log supporting the application.

Module : Applied Game Development Part 1

Introduced in the second semester, Applied Game Development was a group project in which I was placed in a team with other programmers and an art team to create a prototype of a game using Emergent’s Gamebryo engine. The game itself had to meet a set of requirements and demonstrate evidence of organization, planning and the use of appropriate industry techniques.

The Project

This module worked slightly differently from the other second-semester modules, as it was directly linked with the Personal and Professional Development module. This meant that we were graded for two different modules based on the work from this single project. For Applied Game Development we were graded on the technical side, such as code, the design document and personal contribution, as well as weekly tasks and set milestones, whereas for Personal and Professional Development we were marked on how well we worked as a team and how we overcame obstacles affecting development. Our final hand-in was assessed by world-renowned Frontier programmer David Braben and some other members of his team.

Deliverables

Software development portfolio – Detailing the practices and research carried out by our studio.

Personal development report – Individual weekly development reports on my personal contribution to the development process.

A final playable demo – Complete with source code marked on stability, performance and code style.

As well as the final hand-in, we had three milestones along the way: week 1, week 9 and the final demo in week 12.

My Team

June 19, 2009

Module : Console Development Part 1

Console Development ran across both semesters of the second year of my degree and was by far the most challenging module. Its main focus was producing high-performance code for an external, fixed hardware platform. A huge part of the module was understanding the relationship between code performance and platform hardware architecture, which meant looking much deeper into how computer systems work. The first semester was spent on low-level programming and performance optimisation, really getting involved with machine-level code, while the second semester let me test what I had learned and apply it to development on a PSP development kit. Having access to equipment and tools such as these once again shows the University of Derby’s excellent facilities for my course, and demonstrates why it’s one of the best courses around for computer games programming.

The Project

The coursework for this module was not released until the second semester, so the first semester was spent on more theory-based tasks and getting used to coding at a lower level than we were used to in Visual Studio. Instead, we worked through online MIPS tutorials in the MARS/SPIM simulator environment, writing low-level code that worked directly with registers.

In the second semester we were introduced to developing and profiling on the PSP platform, before eventually being required to write a small demo for the PSP limited to 4 KB of machine code and 1 KB of static data. It is important to point out that under no circumstances can I post or even discuss Sony’s code, as it would be a breach of contract and could land both me and the University in trouble, so I will instead discuss the methods and approaches I used to solve problems I came across in my project.

For the first few weeks I spent most of my time revisiting computer architecture, in particular binary operations, logic gates and CPU pipeline structure. In the first year, computer architecture was one subject I found really difficult to be interested in, as I preferred coding and working in software rather than with hardware. I understood most of the concepts and how hardware can play an important role in all manner of computing, but if I’m truly honest, I just wasn’t interested in it. I knew this module would be different because we would be working on PSPs, which changed my attitude towards hardware, and console development eventually became my favorite module of the second semester.

June 17, 2009

Module : Game Development Techniques Part 1

Game Development Techniques was introduced in the first semester of the second year at Derby University. The focus of this module was introducing us to fundamental issues in game development and state-of-the-art development technologies for modern game production. Although formal teaching started when we went back in September, our lecturers gave us a heads-up over the summer to start looking at the modding community and to get some practice using Epic Games’ UT2004 editor. Later in the summer we were sent a description of the coursework and what we should have done by the time we started back.

The Project

The assignment for this module was to create a total conversion based on one of Enid Blyton’s Famous Five books. In 12 weeks we had to produce a fully finished 10-minute demo, based on what we had learned in the module, playable on the PC with an Xbox 360 controller. We were required to build the level in the Unreal level editor and then use UnrealScript to write a new framework for our new game code to work with. We were given certain assets but had to create some of our own as part of the requirements, such as at least one model made in 3ds Max and a picture of ourselves somewhere in the game. The game itself was to be based around a chapter from one of the books, which the player would then play out. Slight alterations to the story were allowed to an extent, but it had to follow the book with relative accuracy.

“Why the Famous Five?” was the first thing I thought. “Because it’s my choice” was the explanation from my lecturer.

So the first thing to do was to start painfully reading some of the books, and even watching video adaptations on the Internet, to decide which book and chapter to base my game around. At the same time I started to research the action/adventure genre for inspiration and ideas I could use as mechanics, as well as writing a design document. My aim was to have the design document written before I went back, as I knew this would be a time-consuming module and I would need every minute to work on it. Once I had written the document and decided on a book and chapter, I started to get to grips with Unreal Ed by following online tutorials at www.3DBuzz.com and slowly building up a few maps of my own before I went back to university. I started by following the tutorials before branching off and trying to make my own levels, including the Guardian level from Halo 3. At first I found the editor really useful and had loads of fun toying around with levels and making little maps of my own, but eventually I found some really, really odd bugs that absolutely drove me mad. For some unknown reason Unreal Ed crashes and closes itself on an almost regular basis, often deleting or undoing hours of work. I know that saving often does help, but there always comes a time when you forget. Luckily I found this out before starting my main project, so I was prepared for it when starting my assignment (not that I didn’t lose any work, because I did).

Module : Introduction to 3D Graphics – Part 2

The Camera

The next stage required me to implement a virtual camera in my project so that I could view objects in my rendered scenes. For the time being, however, I was only concerned with getting my camera’s methods and data fields implemented. These tasks were assigned weekly, which left little time to really take in what was being learned, so this project took up a lot of time outside class. The camera was one of the most difficult concepts for me to grasp and implement correctly. I fell behind during this stage as I tried various ways to get a working camera, but I learned some important lessons about how to approach writing code and about methodologies that make coding easier.

Usually I would just come up with a bit of pseudocode on paper and start whacking in all sorts of variables and parameters without really thinking about how the pieces of code communicate with each other. What I started to do at this point was draw out how classes and object instances would communicate with each other (what we would later be taught as use cases and UML diagrams). Although some people see writing code out on paper as a waste of time, I find it helpful to see on paper how objects and methods should link together. I really like being able to keep thinking about how to solve a problem while taking a break from staring at a computer screen, and I now use this approach whenever I’m writing code, even if it’s only a small amount. Burnout is a killer when you are trying to meet deadlines and bang code out in the shortest amount of time; most of the time I end up rewriting naff code and not using the hundreds of floats I declared at the start of the night.

So when writing the camera class, I had to think about what functionality and data I would be working with and how to implement them. I already had the tools, as I had full Matrix and Vector functionality from the classes I had written and tested over the previous two weeks, so it was just a case of getting the camera to make use of them. I started with the header file, set up a default constructor and destructor, and then thought about the matrices I would need. I needed matrices to represent the screen, perspective and camera transforms, which would be built in local methods for creating each one. I would also need rotation methods for the X, Y and Z axes, and I later added a GetCamPos method that returns a Vector representing the position of the camera.

Camera transforms, rotations and references

When setting up a virtual camera, there are various important transformations required to render images correctly on screen. These transformations move between different coordinate spaces, each a different representation of my vertex data. The four main spaces I used were Modelling, World, View and Screen space, and I considered these when writing my renderer and constructing its pipeline. Modelling space is simply the coordinate system local to the model, relative to its centre, i.e. the X, Y and Z at the centre of the model are 0, 0, 0. The world transform applies translation, scaling and rotation, and allows different models with the same modelling-space coordinates to be placed in a larger shared space. View space was what I was really interested in at this point, as it represents where my camera is and where models in world space are relative to it. Finally, Screen space is simply pixel space on the screen, and the transformation from 3D to 2D is known as projection. Moving between these four spaces is controlled by three matrices: the World, View and Projection matrices. These are the rites of passage from space to space and are responsible for transforming the models’ vertex data.
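To make the chain of spaces concrete, the sketch below pushes one vertex through world, view and projection matrices and then maps it to pixels. It is a simplified, hypothetical version rather than my renderer’s code: column vectors (M × v), a camera looking down +z, a view matrix that only translates by the negative camera position, and a projection with no near/far depth mapping are all assumptions.

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<std::array<float, 4>, 4>;
struct Vec4 { float x, y, z, w; };
struct ScreenPoint { float x, y; };

// Multiply a column vector by a matrix (M * v).
Vec4 transform(const Mat4& m, const Vec4& v) {
    const float in[4] = {v.x, v.y, v.z, v.w};
    float out[4] = {0.0f, 0.0f, 0.0f, 0.0f};
    for (int row = 0; row < 4; ++row)
        for (int k = 0; k < 4; ++k)
            out[row] += m[row][k] * in[k];
    return {out[0], out[1], out[2], out[3]};
}

Mat4 translation(float tx, float ty, float tz) {
    return {{{1, 0, 0, tx}, {0, 1, 0, ty}, {0, 0, 1, tz}, {0, 0, 0, 1}}};
}

// Simplified perspective with focal distance d: x' = d*x, y' = d*y, w' = z.
Mat4 perspective(float d) {
    return {{{d, 0, 0, 0}, {0, d, 0, 0}, {0, 0, 1, 0}, {0, 0, 1, 0}}};
}

// Modelling space -> world -> view -> projection -> screen pixels.
ScreenPoint toScreen(const Vec4& modelPoint, const Mat4& world, const Mat4& view,
                     const Mat4& proj, float width, float height) {
    const Vec4 worldPt = transform(world, modelPoint);
    const Vec4 viewPt = transform(view, worldPt);
    const Vec4 clipPt = transform(proj, viewPt);
    assert(clipPt.w > 0.0f && "vertex must be in front of the camera");
    const float ndcX = clipPt.x / clipPt.w;  // perspective divide
    const float ndcY = clipPt.y / clipPt.w;
    // Map [-1, 1] to pixels; screen y grows downward.
    return {(ndcX + 1.0f) * 0.5f * width, (1.0f - ndcY) * 0.5f * height};
}
```

Moving the camera to (0, 0, -5) corresponds to a view translation of (0, 0, +5), which is why the view matrix is often described as the inverse of the camera’s own transform.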

So the first method I set up built my perspective transform: a matrix created from a float field-of-view value passed into it. This value was then applied along each axis to set up the camera’s view correctly relative to the objects on screen. I then created a build-screen-transform method that simply created a screen mapping from the X and Y values passed in. The last transform method I needed built the camera transform, which gave me a view matrix. This was one of the hardest things for me to understand and get working correctly, as I kept messing up the translation of the camera position and the order in which the rotations were multiplied. It was also at this stage that I realised I had a mistake in my Matrix class that was responsible for screwing up my entire renders. In my matrix multiplication method I had copied and pasted lines of code and accidentally changed a “*” to a “+”, giving me some weird results, and so I learned another important lesson: avoid copying and pasting when coding late at night!
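Two pieces of this stage can be sketched in isolation: a 4×4 matrix multiply, in which every element is a sum of products (exactly where a stray “+” breaks things), and one common way of turning a field of view into a focal distance, d = 1 / tan(fov / 2). Both functions are illustrative assumptions, not the renderer’s actual Matrix or Camera code.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat4 = std::array<std::array<float, 4>, 4>;

Mat4 identity() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

// C = A * B. Each element is a sum of products: a '+' typed where the '*'
// belongs still compiles, but silently produces the wrong matrix.
Mat4 multiply(const Mat4& a, const Mat4& b) {
    Mat4 c{};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col) {
            float sum = 0.0f;
            for (int k = 0; k < 4; ++k) sum += a[row][k] * b[k][col];
            c[row][col] = sum;
        }
    return c;
}

// One common mapping from a field of view (in radians) to a focal distance.
float focalFromFov(float fovRadians) {
    assert(fovRadians > 0.0f && fovRadians < 3.14159265f);
    return 1.0f / std::tan(fovRadians * 0.5f);
}
```

Multiplying by the identity is a cheap sanity check that would have caught the “+” typo immediately. Matrix multiplication is also not commutative, which is why the order in which rotations and the camera translation are combined matters.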

The parameters for the rotation methods were simply a float amount and a matrix reference. The float is the angle to rotate by (in radians, since it is passed to cos and sin), and its sine and cosine are written into the matrix. The matrix reference stores the result so that it can be used later. Another new technique I learned at this point was how to use references when referring to values or data types.

A reference provides another name for a variable: whatever is done to the reference is done to the variable it refers to. The best description I was given came from a classmate, who suggested thinking of a reference as a nickname for a variable, i.e. another name that the variable goes by. So in my methods I pass a reference to a matrix, which I then define and save the working calculations in. Make sense? Check out the code below –

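```cpp
#include <cmath>  // std::cos, std::sin

// Camera and Matrix are the renderer's own classes (declared in its headers).
void Camera::RotateX(float rotX, Matrix& resultMat)
{
    // Build the matrix for a rotation of rotX radians about the X axis and
    // store it in the caller's Matrix through the reference.
    resultMat = Matrix(1, 0,              0,               0,
                       0, std::cos(rotX), -std::sin(rotX), 0,
                       0, std::sin(rotX),  std::cos(rotX), 0,
                       0, 0,              0,               1);
}
```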

The values are written through the reference resultMat, so they end up in the caller’s Matrix. This makes saving results very easy and convenient, and avoids copying large data types. References can be used in a variety of ways and have many advantages when deciding how to construct arguments. I had used references before, but never passed as parameters to methods. This gave me a nice introduction to using them and taught me an important lesson about passing chunks of data.
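To see the “nickname” behaviour in a form that compiles on its own, the sketch below swaps the renderer’s Matrix class for a plain `std::array` (an assumption made for self-containment) and compares passing by reference with passing by value. `RotateXByValue` is a hypothetical counter-example, not part of the camera class.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat4 = std::array<std::array<float, 4>, 4>;

// Pass by reference: the result is written into the caller's matrix.
void RotateX(float rotX, Mat4& resultMat) {
    assert(std::isfinite(rotX) && "rotation angle must be finite");
    const float c = std::cos(rotX);
    const float s = std::sin(rotX);
    resultMat = {{{1, 0, 0, 0}, {0, c, -s, 0}, {0, s, c, 0}, {0, 0, 0, 1}}};
}

// Pass by value: the function works on a copy, so the caller's matrix is unchanged.
void RotateXByValue(float rotX, Mat4 resultMat) {
    RotateX(rotX, resultMat);
}
```

Passing by value here would also copy all sixteen floats on every call, which is the cost the reference avoids.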